Hearing in Noise: Comparing Multi-Stream Architecture and Deep Neural Networks in Hearing Aids

The study by Signia Hearing Technologies, titled “Conversations in Noise: Multi-Stream Architecture vs. Deep Neural Network Approach to Hearing Aids,” compares two advanced hearing aid technologies designed to improve speech understanding in noisy environments: Multi-Stream Architecture (MSA) with RealTime Conversation Enhancement (RTCE), and Deep Neural Network (DNN)-based noise reduction.

Understanding the Technologies

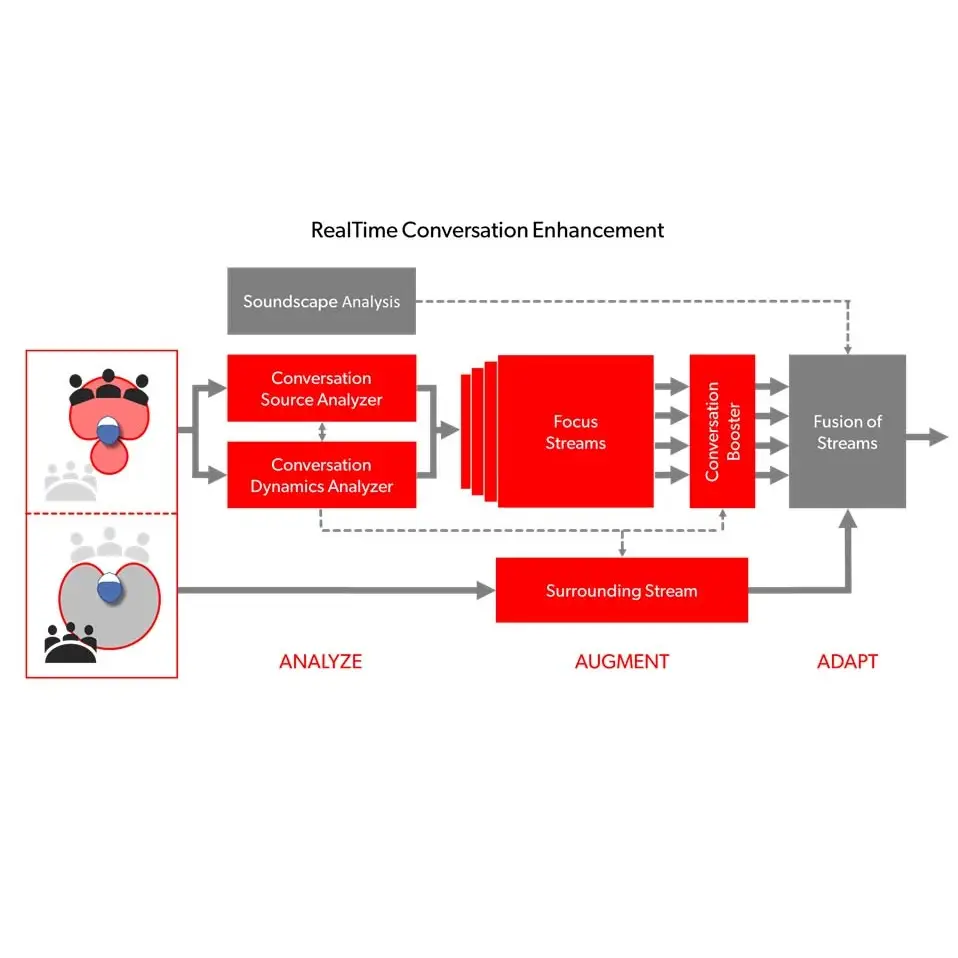

Multi-Stream Architecture (MSA) with RTCE

This approach, used in Signia’s Integrated Xperience (IX) hearing aids, processes sounds from different directions separately. It splits incoming sound into two streams, one from the front and one from the sides and back, and applies distinct processing to each. This allows the device to enhance speech from the front while reducing background noise from other directions. The RTCE component further analyzes the environment to detect and focus on multiple conversation partners, directing narrow beams toward each speaker even as they move.
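To make the idea of "two streams with distinct processing" more concrete, here is a minimal toy sketch. It is not Signia's implementation. It assumes two omnidirectional microphones on the front-back axis, an acoustic travel time between them of exactly one whole sample, and a first-order delay-and-subtract beamformer as a stand-in for the real directional processing. The function names and the -12 dB back-stream attenuation are hypothetical choices made for illustration.

```python
from typing import List, Tuple

# Toy illustration only: NOT Signia's MSA/RTCE implementation.
# Assumes two omnidirectional microphones on the front-back axis whose
# acoustic travel time between them is exactly `delay` whole samples.


def delayed(signal: List[float], delay: int) -> List[float]:
    """Delay a signal by `delay` samples, zero-padding the start."""
    if not 0 <= delay <= len(signal):
        raise ValueError("delay must be between 0 and len(signal)")
    return [0.0] * delay + signal[: len(signal) - delay]


def split_streams(
    front_mic: List[float], back_mic: List[float], delay: int
) -> Tuple[List[float], List[float]]:
    """Split two microphone signals into a front stream and a back stream.

    Delay-and-subtract (first-order differential) beams:
      front stream = front_mic - delayed(back_mic)  -> cancels sound from behind
      back stream  = back_mic - delayed(front_mic)  -> cancels sound from the front
    """
    back_d = delayed(back_mic, delay)
    front_d = delayed(front_mic, delay)
    front = [f - b for f, b in zip(front_mic, back_d)]
    back = [b - f for b, f in zip(back_mic, front_d)]
    return front, back


def mix_streams(
    front: List[float], back: List[float], back_gain_db: float = -12.0
) -> List[float]:
    """Process the streams differently: keep the front, attenuate the back."""
    gain = 10 ** (back_gain_db / 20)  # convert dB to a linear amplitude factor
    return [f + gain * b for f, b in zip(front, back)]


if __name__ == "__main__":
    D = 1  # hypothetical inter-microphone delay in samples
    speech = [1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0, 0.0]  # talker in front
    noise = [0.3, -0.3, 0.3, 0.3, -0.3, 0.3, -0.3, -0.3]  # noise from behind

    # Sound from the front reaches the front mic first;
    # sound from behind reaches the back mic first.
    front_mic = [s + n for s, n in zip(speech, delayed(noise, D))]
    back_mic = [s + n for s, n in zip(delayed(speech, D), noise)]

    front, back = split_streams(front_mic, back_mic, D)
    print("front stream:", [round(x, 3) for x in front])
    print("back stream: ", [round(x, 3) for x in back])
    print("output:      ", [round(x, 3) for x in mix_streams(front, back)])
```

In this sketch the front stream contains only the talker and the back stream contains only the noise. Each stream can then be treated differently, which is the core of the two-stream idea. The front stream is spectrally colored: it holds the speech minus a copy delayed by two samples, and this toy does not equalize that coloration. The sketch also does not model RTCE's steering of narrow beams toward moving conversation partners.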

Deep Neural Network (DNN)-Based Noise Reduction

DNN technology, similar to that used in Oticon hearing aids, employs artificial intelligence that learns to distinguish speech from noise by analyzing vast amounts of data. Once trained, the system applies this knowledge to real-world situations, aiming to enhance speech clarity in various noisy environments. However, its effectiveness can vary depending on the complexity of the environment and the quality of the training data.

Study Methodology

The study involved 20 older adults with mild-to-severe hearing loss, all native English speakers. Participants were fitted with two types of hearing aids: one featuring MSA with RTCE and another using DNN-based noise reduction. In a controlled environment simulating a group conversation with three speakers positioned at different angles, participants’ speech understanding was tested across various noise levels. The setup included multiple loudspeakers to create a realistic acoustic scene. To prevent bias, participants were not told which hearing aid they were using at any given time.

Key Findings

Neural Indicators: Measurements showed that MSA with RTCE led to enhanced brain responses related to speech processing and reduced signs of listening fatigue.

Speech Understanding: Hearing aids with MSA and RTCE demonstrated superior performance in enhancing speech clarity in noisy, multi-speaker environments compared to those with DNN-based noise reduction.

Listening Effort: Participants reported reduced listening effort when using the MSA with RTCE technology, indicating a more comfortable listening experience.

Conclusion

While both technologies aim to improve speech understanding in noisy settings, the study suggests that MSA with RTCE currently offers more effective real-time adaptation to dynamic conversations, especially in group settings with multiple speakers. RTCE’s ability to focus on and enhance multiple conversation partners simultaneously provides a significant advantage over DNN-based approaches, which may struggle in complex acoustic environments.

For a more detailed exploration of the study and its findings, you can read the full article here: Conversations in Noise: Multi-Stream Architecture vs. Deep Neural Network Approach to Hearing Aids.